AI Observability: Using Machine Learning for Proactive Issue Resolution

In today's fast-paced digital world, Artificial Intelligence (AI) and Machine Learning (ML) models are no longer confined to research labs; they are the engine driving critical business functions, from personalized customer experiences to fraud detection. But as AI systems become more complex, operating in unpredictable real-world environments, a new challenge has emerged: how do we ensure they remain reliable, accurate, and fair?

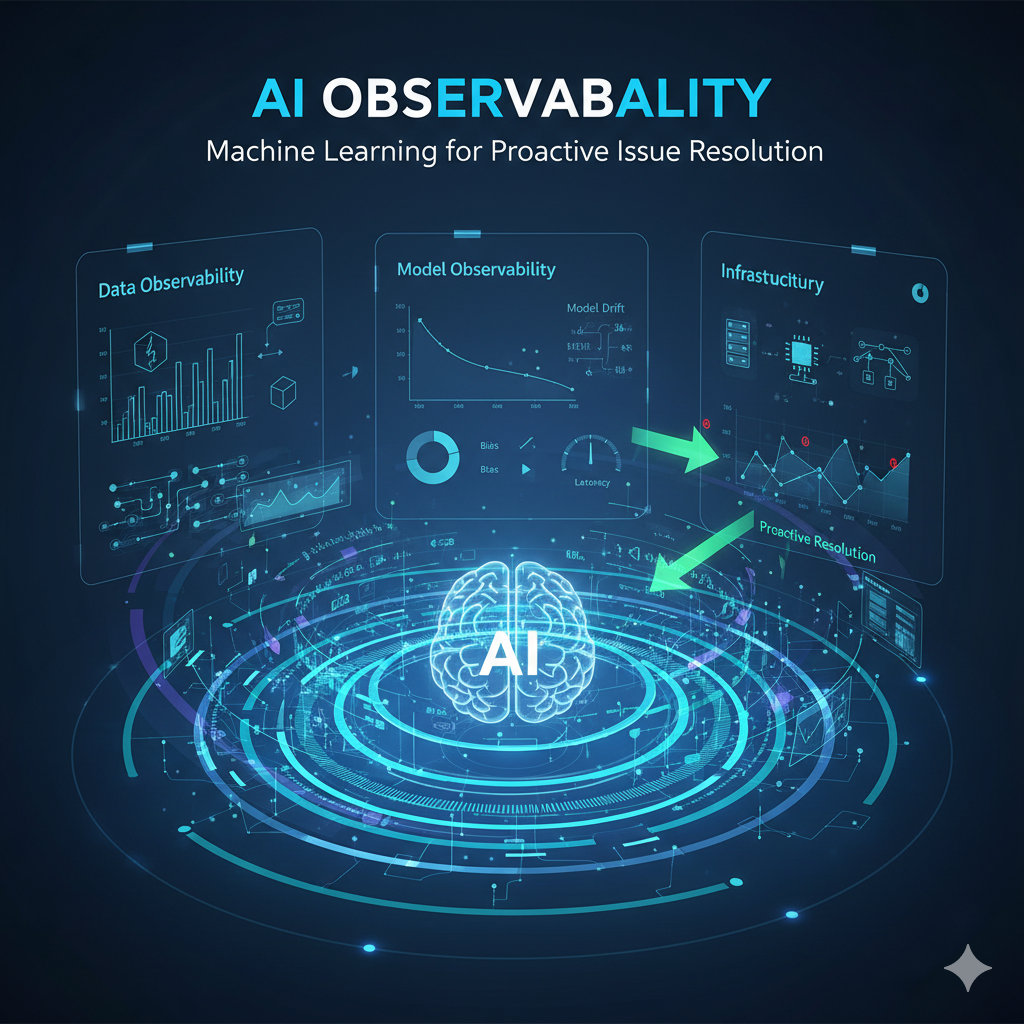

The answer is AI Observability, a sophisticated practice that moves beyond traditional monitoring to offer deep, end-to-end visibility into the internal state and performance of AI systems. And the secret weapon in this practice? The very technology it's designed to monitor: Machine Learning for proactive issue resolution.

What is AI Observability?

Observability, in general, is the ability to understand a system's internal state by examining its external outputs: metrics, events, logs, and traces (MELT). AI Observability applies this principle to AI and ML systems, but with a critical difference: it must account for the non-deterministic, probabilistic nature of machine learning models.

AI Observability is typically broken down into three critical pillars:

- Data Observability: Monitoring the data that feeds the model. This includes tracking quality (e.g., missing values, schema changes) and distribution shifts, known as Data Drift. If the real-world data starts to look different from the training data, the model's predictions are likely to degrade.

- Model Observability: Tracking the model's actual performance and behavior in production. Key metrics include Model Drift (the decline in predictive accuracy), latency, throughput, bias, and fairness metrics.

- Infrastructure Observability: Ensuring the underlying computing resources—like GPUs, memory, and network—are operating efficiently and not creating bottlenecks.
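As one illustration of the Data Drift check described under Data Observability, the sketch below computes a Population Stability Index (PSI) between a training sample and a production sample of a single numeric feature. PSI is only one of several drift measures, and the function name, the bin count, and the `eps` smoothing value are assumptions made for this example rather than part of any particular tool.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference (training) sample and a current (production) sample."""
    lo, hi = min(reference), max(reference)
    # Equal-width buckets over the reference range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref = bucket_shares(reference)
    cur = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


training = [i / 100 for i in range(1000)]
production = [x + 3 for x in training]  # simulated upward shift in the feature
print(population_stability_index(training, production))
```

A PSI near zero means the two samples fill the buckets in similar proportions. A frequently quoted rule of thumb treats values above roughly 0.2 as a meaningful shift, but the right cutoff depends on the feature and should be tuned per use case.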

While traditional monitoring tells you if a model crashed, AI observability aims to tell you why its performance is degrading and, crucially, to predict when it's about to fail.

The Power of Machine Learning in Observability

The sheer volume and complexity of telemetry data generated by modern AI systems make manual analysis impractical. This is where machine learning shines, transforming the reactive process of debugging into a proactive system of prevention.

By applying ML algorithms to the observability data (logs, metrics, and traces), we unlock capabilities that are essential for preemptive maintenance:

1. Automated Anomaly Detection

Static thresholds ("Alert if CPU usage is over 90%") are often ineffective for dynamic AI systems, where what counts as normal load changes over time. ML models, such as those based on statistical techniques or clustering, can:

- Learn Normal Behavior: They continuously analyze historical patterns to build a dynamic baseline for what "normal" looks like for your specific model, accounting for daily, weekly, and seasonal variations.

- Identify Subtle Deviations: They can spot minute, non-obvious deviations from this baseline across multiple correlated metrics (e.g., a slight increase in latency combined with a small change in a feature distribution) that a human or static rule would miss.

- Reduce Alert Fatigue: By filtering out known, non-critical noise, ML can substantially reduce false positives, allowing teams to focus on genuinely critical issues.
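The idea of a dynamic baseline can be sketched with a rolling z-score, one of the simpler statistical techniques in this family. The function below is a minimal illustration: the name `rolling_zscore_anomalies`, the window of 30 points, and the threshold of 3 standard deviations are all assumptions for the example. It works on a single metric and does not model weekly or seasonal cycles, which a production detector would need to handle.

```python
from collections import deque
from statistics import mean, stdev
from typing import Deque, Iterable, List, Tuple


def rolling_zscore_anomalies(
    values: Iterable[float],
    window: int = 30,
    threshold: float = 3.0,
) -> List[Tuple[int, float]]:
    """Return (index, value) pairs that deviate strongly from the recent baseline."""
    history: Deque[float] = deque(maxlen=window)
    anomalies: List[Tuple[int, float]] = []
    for i, v in enumerate(values):
        # Only score a point once a full window of baseline data exists.
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(v - mu) / sigma > threshold:
                anomalies.append((i, v))
                continue  # keep the outlier out of the baseline
        history.append(v)
    return anomalies


# Hypothetical inference latencies in milliseconds with one injected spike.
latencies = [100.0 + (i % 5) for i in range(60)]
latencies[45] = 180.0
print(rolling_zscore_anomalies(latencies))
```

Because the baseline is recomputed from recent history, the same code adapts to a metric whose normal level shifts gradually, which a fixed threshold cannot do.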

2. Predictive Analytics and Forecasting

One of the greatest benefits of using ML in observability is its ability to forecast the future state of the system.

- Forecasting Model Drift: By analyzing trends in data quality and model performance metrics, ML can predict when a model's accuracy is likely to fall below an acceptable Service Level Objective (SLO), giving the Data Science team days or even weeks to schedule a retraining cycle.

- Resource Prediction: ML can forecast future resource consumption (e.g., storage, compute capacity) based on expected user load and model usage patterns, helping SRE and DevOps teams proactively scale infrastructure and avoid over-provisioning or service disruption.
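To make the drift forecast concrete, the sketch below fits a straight line to daily accuracy measurements and extrapolates to the day the line crosses the SLO. A linear trend is a deliberately simple stand-in for the forecasting models mentioned above; the function name `days_until_slo_breach` and the sample numbers are hypothetical.

```python
from typing import Optional, Sequence


def days_until_slo_breach(
    daily_accuracy: Sequence[float],
    slo: float,
) -> Optional[float]:
    """Fit a least-squares line to daily accuracy and extrapolate to the SLO."""
    n = len(daily_accuracy)
    if n < 2:
        return None  # not enough data to estimate a trend
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(daily_accuracy) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_accuracy))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    if slope >= 0:
        return None  # no downward trend, so no predicted breach
    crossing_day = (slo - intercept) / slope
    # Days remaining, measured from the most recent observation.
    return max(crossing_day - (n - 1), 0.0)


# Hypothetical accuracy that loses 0.2 percentage points per day.
accuracy = [0.95 - 0.002 * day for day in range(10)]
print(days_until_slo_breach(accuracy, slo=0.90))
```

The returned value is the lead time a team would have to schedule retraining. Real accuracy series are noisier and rarely linear, so the estimate is best treated as a rough planning signal.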

3. Smart Root Cause Analysis (RCA) and Correlation

When an issue does occur, ML shortens the mean time to repair (MTTR) by automatically narrowing down the likely source.

- Correlating Signals: ML algorithms can automatically correlate events across the entire stack—from a code change in a deployment log, to an increase in model inference latency, to an error spike in a specific service trace—to suggest the probable root cause.

- Log Clustering: Generative AI and natural language processing (NLP) techniques can automatically cluster millions of unstructured log lines into a few distinct patterns, quickly highlighting new or rare log messages that are often the symptom of a novel issue.
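The log clustering idea can be illustrated without any ML at all. The sketch below swaps in a much simpler technique than the generative AI and NLP approaches named above: it masks numeric and hexadecimal tokens with regular expressions so that lines differing only in their values collapse into one template, then reports templates that occur rarely. The function names and the sample log lines are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

_HEX = re.compile(r"\b0x[0-9a-fA-F]+\b")
_NUM = re.compile(r"\b\d+(?:\.\d+)?\b")


def log_template(line: str) -> str:
    """Replace variable tokens so lines that differ only in values share a template."""
    line = _HEX.sub("<HEX>", line)
    return _NUM.sub("<NUM>", line)


def rare_templates(lines: Iterable[str], max_count: int = 1) -> List[Tuple[str, int]]:
    """Return templates seen at most max_count times, with their counts."""
    counts = Counter(log_template(line) for line in lines)
    return [(template, n) for template, n in counts.items() if n <= max_count]


logs = [
    "request 101 took 35 ms",
    "request 102 took 41 ms",
    "request 103 took 38.5 ms",
    "CUDA out of memory on device 0",
]
print(rare_templates(logs))
```

Here the three request lines share one template, so only the out-of-memory message is surfaced as rare. Learned approaches can group lines that differ in wording as well as in values, which simple masking cannot.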

Best Practices for Proactive AI Observability

Implementing an ML-powered observability strategy requires a robust, integrated approach.

| Best Practice | Description | Proactive Impact |
| --- | --- | --- |
| Integrate Early (Shift Left) | Embed observability instrumentation from the initial development and training phase into your MLOps pipeline, not just in production. | Establishes a true performance baseline and catches issues before they are deployed. |
| Track End-to-End Lineage | Maintain an audit trail of data inputs, preprocessing steps, model versions, and deployment configurations for every prediction. | Enables rapid rollback and full explainability/audit compliance when a model makes a bad decision. |
| Automate Alerting and Remediation | Use ML-driven anomaly detection to trigger alerts, and integrate these alerts with automated remediation workflows (e.g., auto-scaling infrastructure, triggering an emergency model re-train). | Reduces human intervention, minimizing mean time to detect (MTTD) and mean time to repair (MTTR). |
| Prioritize Explainability | Use techniques like SHAP or LIME to understand why a model made a specific prediction. Log the features and decision paths for high-stakes predictions. | Helps debug model drift, identifies hidden biases, and builds user trust by making AI accountable. |
| Focus on Business Impact Metrics | Link technical observability metrics (latency, accuracy) to real-world business outcomes (conversion rates, lost revenue, customer churn). | Ensures the observability strategy is aligned with business value and prioritizes the most costly issues. |
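The lineage practice in the table can be sketched as a small structured record written alongside every prediction. The class below is one possible shape, not a standard schema: the `PredictionRecord` name, its fields, and the example values such as `fraud-v3` are all assumptions for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class PredictionRecord:
    """Hypothetical audit record tying one prediction to its inputs and model."""

    model_version: str
    preprocessing_steps: List[str]
    features: Dict[str, Any]
    prediction: Any

    def features_fingerprint(self) -> str:
        """Stable SHA-256 hash of the input features, independent of key order."""
        payload = json.dumps(self.features, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def to_log_line(self) -> str:
        """Serialize the record, plus its fingerprint, as one JSON log line."""
        record = asdict(self)
        record["features_fingerprint"] = self.features_fingerprint()
        return json.dumps(record, sort_keys=True)


record = PredictionRecord(
    model_version="fraud-v3",
    preprocessing_steps=["impute_missing", "standard_scale"],
    features={"amount": 129.99, "country": "DE"},
    prediction=0.07,
)
print(record.to_log_line())
```

Emitting one JSON line per prediction keeps the record easy to ship through an existing log pipeline, and the fingerprint lets an auditor confirm that two predictions saw identical inputs without comparing every feature.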

Conclusion: The Future is Proactive

The journey from simply monitoring an AI model to having a truly observable system is transformative. By leveraging the power of machine learning, organizations can move past reactive troubleshooting. AI Observability is the key to creating self-healing, resilient, and trustworthy AI systems that operate with peak performance and minimal downtime.

In the age of AI, success depends not just on building the best models but on keeping them reliable. That starts with the ability to look inside the black box and resolve issues before they affect a customer.