How to Implement Real-Time Features (Chat, Notifications) Without Killing Performance

Adding real-time features like chat or notifications can significantly enhance user engagement, but they can also strain your servers if not implemented efficiently. Here’s how to keep your app fast and scalable while delivering real-time updates.

I. Architectural Considerations

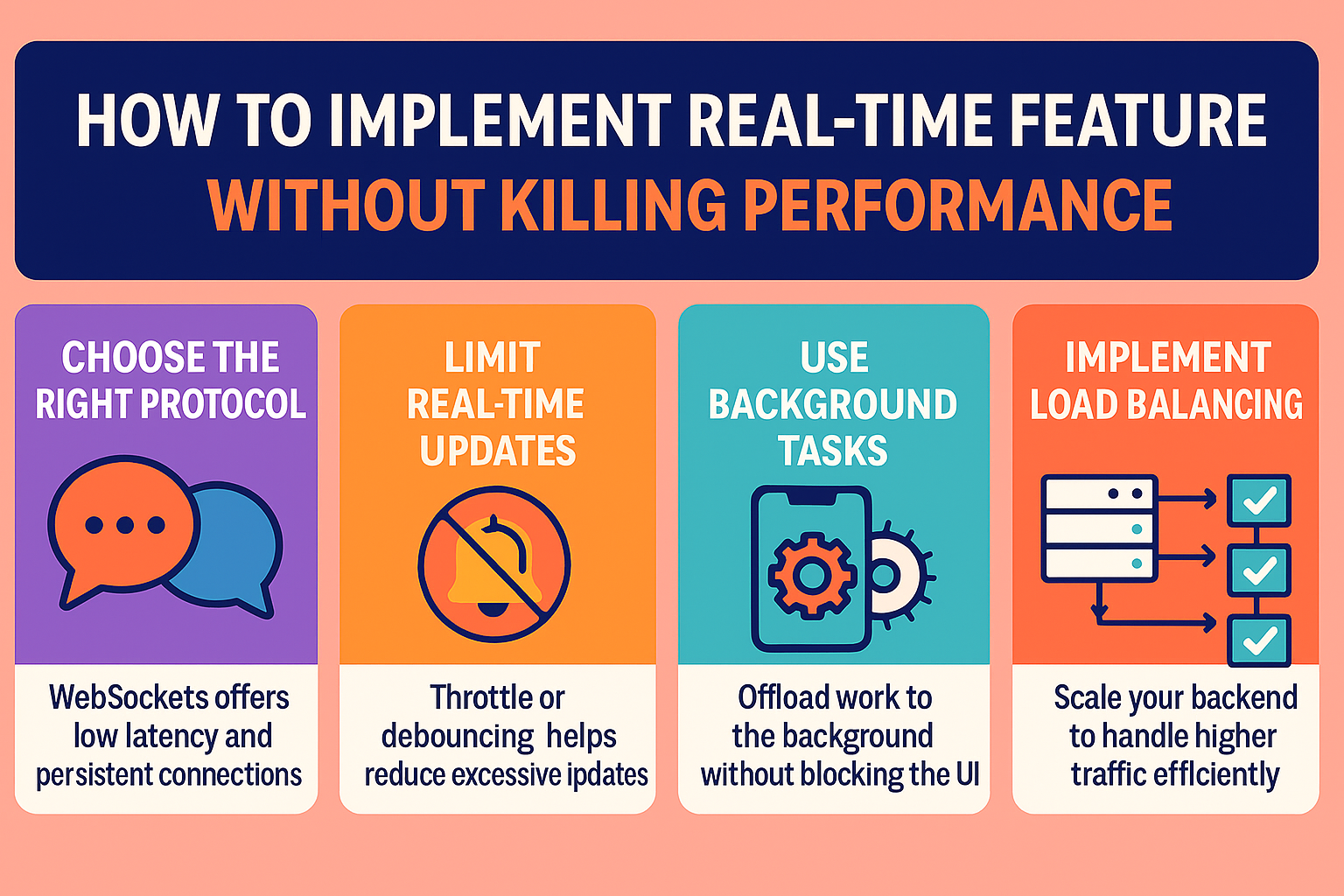

1. Choose the Right Communication Protocol:

- WebSockets: This is the gold standard for real-time applications. WebSockets provide a persistent, full-duplex communication channel between the client and server, minimizing overhead compared to traditional HTTP polling. This is ideal for chat and instant notifications.

- Server-Sent Events (SSE): For one-way communication (server to client), SSE can be a simpler alternative to WebSockets. It's suitable for notifications where the client doesn't need to send frequent messages back to the server.

- Long Polling (not recommended for new projects): Long polling was widely used before WebSockets and SSE were broadly supported, but it is less efficient: the client must send a new HTTP request, headers included, after every response it receives. Consider it only if WebSockets and SSE are not viable in your environment.
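To make the SSE option concrete, here is a minimal sketch of how a server frames one event on the wire. It assumes a Python backend; `format_sse` is a hypothetical helper, and the returned string would be written to an HTTP response served with `Content-Type: text/event-stream`, which the browser reads through its built-in `EventSource` API.

```python
import json
import re
from typing import Optional


def format_sse(data: str, event: Optional[str] = None, event_id: Optional[str] = None,
               retry_ms: Optional[int] = None) -> str:
    """Serialize one event in the text/event-stream format used by SSE."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")     # client listens via addEventListener(event, ...)
    if event_id is not None:
        lines.append(f"id: {event_id}")     # browser sends it back as Last-Event-ID on reconnect
    if retry_ms is not None:
        lines.append(f"retry: {retry_ms}")  # reconnection delay hint, in milliseconds
    # Every line of the payload gets its own "data:" field; the client re-joins them with "\n".
    for part in re.split(r"\r\n|\r|\n", data):
        lines.append(f"data: {part}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


payload = json.dumps({"type": "friend_request", "from": "alice"})
print(format_sse(payload, event="notification", event_id="42"), end="")
```

Because the format is plain text over an ordinary HTTP response, SSE works with existing HTTP infrastructure and needs no special client library.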

2. Scalable Backend Architecture:

- Microservices: Break down your application into smaller, independent services. This allows you to scale specific real-time components (e.g., chat service, notification service) independently based on demand.

- Message Queues/Brokers (e.g., RabbitMQ, Kafka, Redis Pub/Sub): These are crucial for decoupling services and handling asynchronous communication.

- i. Chat: When a user sends a message, it goes into a message queue. The chat service picks it up, processes it, and publishes it to relevant subscribers (other chat participants).

- ii. Notifications: When an event triggers a notification, it's published to a queue. The notification service consumes it and pushes it to the relevant users.

- Load Balancers: Distribute incoming real-time connections across multiple server instances to prevent any single server from becoming a bottleneck.

- Stateless Servers: Keep your real-time servers as stateless as possible. Each WebSocket connection still lives on a particular server, but if session data, presence, and subscriptions are stored externally (e.g., in a distributed cache), you can add or remove instances freely: clients on a removed instance simply reconnect and can land on any other instance without losing state.
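The decoupling described above can be sketched with an in-memory broker. This is a hypothetical stand-in (the class `InMemoryBroker` and channel names like `room:42` are illustrative, not part of any library) for Redis Pub/Sub, RabbitMQ, or Kafka: each gateway server subscribes to the channels of the users it holds connections for, and the chat service publishes once without knowing which server holds which user.

```python
import asyncio
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class InMemoryBroker:
    """Hypothetical stand-in for a pub/sub broker such as Redis Pub/Sub.

    Each subscriber (e.g., one gateway server holding WebSocket connections)
    gets its own asyncio.Queue; publish() fans a message out to all of them.
    """

    def __init__(self) -> None:
        self._subscribers: Dict[str, Set[asyncio.Queue]] = defaultdict(set)

    def subscribe(self, channel: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._subscribers[channel].add(queue)
        return queue

    def unsubscribe(self, channel: str, queue: asyncio.Queue) -> None:
        self._subscribers[channel].discard(queue)

    def publish(self, channel: str, message: dict) -> int:
        """Deliver to every current subscriber and return how many received it."""
        subscribers = self._subscribers.get(channel, set())
        for queue in subscribers:
            queue.put_nowait(message)
        return len(subscribers)


async def demo() -> Tuple[int, List[dict]]:
    broker = InMemoryBroker()
    # Two gateway instances each hold connections for members of room 42.
    gateway_a = broker.subscribe("room:42")
    gateway_b = broker.subscribe("room:42")
    # The chat service publishes once; it does not track which gateway holds which user.
    delivered = broker.publish("room:42", {"from": "alice", "text": "hi"})
    return delivered, [await gateway_a.get(), await gateway_b.get()]


print(asyncio.run(demo()))
```

Because gateways only consume from the broker, any instance can be added or removed without the chat service changing; a real broker additionally carries messages between separate machines.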

3. Database Selection:

- NoSQL Databases (e.g., MongoDB, Cassandra, Redis): Often preferred for real-time features due to their ability to handle high write/read volumes and flexible schemas.

- i. Chat: Store chat messages in a NoSQL database for fast retrieval and scalability.

- ii. Notifications: Store notification preferences, read status, and history.

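- In-Memory Data Stores (e.g., Redis, Memcached): Excellent for caching frequently accessed data (e.g., user presence, unread message counts) to reduce database load. Redis Pub/Sub is also a good option for fanning messages out across server instances, but it does not persist messages, so subscribers that are disconnected when a message is published never receive it.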
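A common way to use an in-memory store for unread counts is the cache-aside pattern: read from the cache, fall back to the database on a miss, and invalidate when the data changes. The sketch below uses a tiny in-process `TTLCache` so it runs without a Redis server; `TTLCache`, `unread_count`, `on_new_message`, and the `unread:<user>` key format are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Minimal in-process cache whose entries expire after `ttl` seconds
    (a stand-in for something like a Redis key with an expiry)."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, int]] = {}

    def get(self, key: Hashable) -> Optional[int]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # lazily evict expired entries
            return None
        return value

    def set(self, key: Hashable, value: int) -> None:
        self._entries[key] = (self.clock() + self.ttl, value)

    def delete(self, key: Hashable) -> None:
        self._entries.pop(key, None)


def unread_count(user_id: str, cache: TTLCache, load_from_db: Callable[[str], int]) -> int:
    """Cache-aside read: serve from the cache, fall back to the database on a miss."""
    key = f"unread:{user_id}"
    cached = cache.get(key)
    if cached is not None:  # 0 is a valid cached value, so compare against None
        return cached
    count = load_from_db(user_id)
    cache.set(key, count)
    return count


def on_new_message(recipient_id: str, cache: TTLCache) -> None:
    """Invalidate instead of updating in place; the next read reloads the true count."""
    cache.delete(f"unread:{recipient_id}")
```

The TTL bounds how stale a count can get even if an invalidation is missed, while explicit invalidation keeps counts fresh in the common case.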

II. Technology Stack Choices

1. Backend Languages/Frameworks:

- Node.js (with Socket.IO/ws): Extremely popular for real-time applications due to its asynchronous, event-driven nature. Socket.IO provides a robust abstraction over WebSockets with fallback mechanisms.

- Python (with FastAPI/Flask and WebSocket libraries like websockets): Python is a strong contender, especially with async frameworks such as FastAPI, which perform well for I/O-bound async workloads.

- Java (with Spring WebFlux/Undertow): While traditionally associated with enterprise applications, modern Java frameworks offer excellent non-blocking I/O support for real-time workloads.

2. Frontend Libraries/Frameworks:

- React, Angular, Vue.js: These frameworks provide efficient ways to manage UI updates in response to real-time data.

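- Socket.IO Client Library: Required when the backend uses Socket.IO. Socket.IO adds its own protocol on top of WebSockets, so a plain WebSocket client cannot connect to a Socket.IO server (and vice versa).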

- Native WebSocket API: For simpler implementations or when direct WebSocket control is desired.

III. Performance Optimization Strategies

1. Efficient Data Transfer:

- Minimize Payload Size: Send only essential data. JSON is convenient and human-readable; for high-volume traffic, a binary format such as Protocol Buffers usually produces smaller messages that are faster to parse.

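- Compression: Enable the WebSocket permessage-deflate extension if your server, proxies, and clients support it. It pays off mainly for larger text messages and costs CPU and per-connection memory, so measure before enabling it everywhere.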

- Batching/Debouncing: For notifications, batch multiple updates into a single message to reduce network overhead. Debounce chat typing indicators.
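Batching can be as simple as buffering notifications per user and flushing them either when enough have accumulated or when the oldest one has waited long enough. The following is a minimal sketch under those assumptions; `NotificationBatcher`, `max_batch`, and `max_wait` are illustrative names, and `send` stands in for whatever writes a frame to the user's connection.

```python
import json
import time
from typing import Callable, List, Optional


class NotificationBatcher:
    """Buffers one user's notifications and sends them as a single message.

    Flushes when `max_batch` items are buffered or when the oldest buffered
    item has waited at least `max_wait` seconds, whichever comes first.
    """

    def __init__(self, send: Callable[[List[dict]], None], max_batch: int = 20,
                 max_wait: float = 0.5, clock: Callable[[], float] = time.monotonic) -> None:
        self.send = send
        self.max_batch = max_batch
        self.max_wait = max_wait
        self.clock = clock
        self._buffer: List[dict] = []
        self._first_at: Optional[float] = None

    def add(self, notification: dict) -> None:
        if not self._buffer:
            self._first_at = self.clock()  # the wait is measured from the first buffered item
        self._buffer.append(notification)
        if len(self._buffer) >= self.max_batch:
            self.flush()

    def tick(self) -> None:
        """Call periodically (e.g., from a timer) to flush batches that waited long enough."""
        if self._buffer and self.clock() - self._first_at >= self.max_wait:
            self.flush()

    def flush(self) -> None:
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._first_at = None
            self.send(batch)


# Usage: each flush becomes one compact JSON frame instead of one frame per notification.
frames: List[str] = []
batcher = NotificationBatcher(lambda batch: frames.append(json.dumps(batch, separators=(",", ":"))),
                              max_batch=2)
batcher.add({"type": "like", "post": 7})
batcher.add({"type": "follow", "user": 9})
print(frames)
```

Injecting `clock` keeps the class testable; in a server you would call `tick()` from a periodic timer. A debouncer for typing indicators differs in that it restarts its wait on every new event instead of measuring from the first buffered one.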

2. Connection Management:

- Heartbeats/Keepalives: Implement heartbeats to detect dead connections and prevent orphaned connections from consuming resources.

- Connection Pooling: On the server side, reuse pooled connections to databases and other external services instead of opening a new one per request.

- Graceful Disconnection: Handle client disconnects gracefully to free up server resources promptly.
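Heartbeat bookkeeping boils down to recording when each connection was last heard from and periodically evicting the ones that went silent. This sketch assumes the server sends pings on a fixed interval and calls `touch()` whenever a pong or any other message arrives; `HeartbeatMonitor` and its method names are hypothetical. Many WebSocket libraries already ship ping/pong handling, so check yours before rolling your own.

```python
import time
from typing import Callable, Dict, List


class HeartbeatMonitor:
    """Tracks when each connection was last heard from and finds stale ones.

    A connection counts as dead if nothing (pong or regular message) arrived
    within `timeout` seconds.
    """

    def __init__(self, timeout: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout
        self.clock = clock
        self._last_seen: Dict[str, float] = {}

    def register(self, conn_id: str) -> None:
        self._last_seen[conn_id] = self.clock()

    def touch(self, conn_id: str) -> None:
        """Record a pong or any inbound message."""
        if conn_id in self._last_seen:
            self._last_seen[conn_id] = self.clock()

    def remove(self, conn_id: str) -> None:
        """Graceful disconnect: forget the connection immediately."""
        self._last_seen.pop(conn_id, None)

    def reap(self) -> List[str]:
        """Remove and return connections that exceeded the timeout."""
        now = self.clock()
        dead = [c for c, seen in self._last_seen.items() if now - seen > self.timeout]
        for conn_id in dead:
            del self._last_seen[conn_id]  # caller should also close the socket
        return dead
```

Setting `timeout` to a few ping intervals tolerates a single lost pong, and calling `remove()` on a graceful disconnect frees the entry immediately instead of waiting for the next `reap()`.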

3. Scalability and Resource Management:

- Horizontal Scaling: Add more server instances as user load increases.

- Vertical Scaling: Upgrade server resources (CPU, RAM) for individual instances if needed, but horizontal scaling is generally preferred for real-time.

- Resource Throttling: Implement limits on the number of messages a user can send within a timeframe to prevent abuse and resource exhaustion.

- Connection Limits: Set maximum connection limits per server to prevent overloading.
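Per-user message throttling is commonly implemented as a token bucket: each user gets a bucket of `capacity` tokens that refills at `rate` tokens per second, and each message spends one. The sketch below is one such implementation, kept in process memory for clarity; `TokenBucketLimiter` is a hypothetical name, and a multi-instance deployment would need the bucket state in a shared store such as Redis.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-user token bucket: bursts of up to `capacity` messages,
    refilled continuously at `rate` messages per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: Dict[str, Tuple[float, float]] = {}  # user -> (tokens, last_refill)

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(user_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[user_id] = (tokens - 1, now)
            return True
        self._buckets[user_id] = (tokens, now)
        return False
```

A rejected message can be dropped or answered with an error frame; the bucket lets short bursts through while capping the sustained rate.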

4. Client-Side Optimizations:

- Efficient UI Rendering: Optimize your frontend to efficiently render and update UI elements without causing performance bottlenecks. Use virtualized lists for long chat histories.

- Local Caching: Cache frequently accessed data on the client-side to reduce server round trips.

- Error Handling and Reconnection Logic: Implement robust error handling and automatic WebSocket reconnection, using exponential backoff with random jitter so that thousands of clients do not reconnect at the same instant after an outage.
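Reconnection logic is typically built on exponential backoff with jitter. The sketch below uses the "full jitter" variant; `backoff_delays` and `connect_with_retry` are hypothetical helpers, and `connect` is a placeholder assumed to raise `OSError` on failure. It is written in Python for consistency with the other examples, although in a browser the same logic would live in the client's JavaScript.

```python
import asyncio
import random
from typing import Awaitable, Callable, Iterator, Optional, TypeVar

T = TypeVar("T")


def backoff_delays(base: float = 0.5, cap: float = 30.0,
                   rng: Optional[random.Random] = None) -> Iterator[float]:
    """Yield reconnect delays using exponential backoff with "full jitter".

    Attempt n waits a random time in [0, min(cap, base * 2**n)], so clients that
    dropped at the same moment do not all reconnect in lockstep.
    """
    if rng is None:
        rng = random.Random()
    attempt = 0
    while True:
        yield rng.uniform(0, min(cap, base * 2 ** attempt))
        attempt = min(attempt + 1, 32)  # bound the exponent so base * 2**attempt stays a small float


async def connect_with_retry(connect: Callable[[], Awaitable[T]], base: float = 0.5,
                             cap: float = 30.0, max_attempts: Optional[int] = None) -> T:
    """Call `connect` until it succeeds, sleeping between failed attempts.

    `connect` is a placeholder for whatever opens your socket; it is assumed
    to raise OSError on failure.
    """
    for attempt, delay in enumerate(backoff_delays(base, cap)):
        try:
            return await connect()
        except OSError:
            if max_attempts is not None and attempt + 1 >= max_attempts:
                raise  # give up and surface the last error
            await asyncio.sleep(delay)
```

After a successful reconnect, the client typically needs to resubscribe to its channels and fetch anything it missed while offline, for example messages newer than the last one it saw.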

5. Monitoring and Alerting:

- Real-time Metrics: Monitor key metrics like active connections, message throughput, latency, CPU usage, memory consumption, and error rates.

- Alerting: Set up alerts for anomalies or threshold breaches to proactively address performance issues.

- Distributed Tracing: Use tools like OpenTelemetry or Jaeger to trace requests across your microservices to identify bottlenecks.
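To make those metrics concrete, here is a hypothetical in-process collector that tracks active connections, message and error counts, and a nearest-rank latency percentile over a sliding window of recent samples. In practice you would export these through a metrics library or OpenTelemetry rather than compute them by hand; the class name, the error-rate definition (errors per message), and the thresholds are assumptions for illustration.

```python
import math
from collections import deque
from typing import Deque, Dict


class RealtimeMetrics:
    """Hypothetical in-process counters for a real-time gateway."""

    def __init__(self, window: int = 1000) -> None:
        self.active_connections = 0
        self.messages = 0
        self.errors = 0
        # Keep only the most recent `window` latency samples.
        self._latencies_ms: Deque[float] = deque(maxlen=window)

    def on_connect(self) -> None:
        self.active_connections += 1

    def on_disconnect(self) -> None:
        self.active_connections -= 1

    def on_message(self, latency_ms: float) -> None:
        self.messages += 1
        self._latencies_ms.append(latency_ms)

    def on_error(self) -> None:
        self.errors += 1

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile (0 < p <= 100) over the recent window."""
        if not self._latencies_ms:
            return 0.0
        ordered = sorted(self._latencies_ms)
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    def alerts(self, max_p95_ms: float, max_error_rate: float) -> Dict[str, bool]:
        """Threshold checks of the kind an alerting rule would encode."""
        error_rate = self.errors / self.messages if self.messages else 0.0
        return {
            "p95_latency": self.percentile(95) > max_p95_ms,
            "error_rate": error_rate > max_error_rate,
        }
```

Tracking percentiles rather than averages matters for real-time features, because a small fraction of slow deliveries is what users notice and an average hides it.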

IV. Specific Real-Time Feature Implementations

Chat:

- User Presence: Use Redis or an in-memory store to track user online/offline status.

- Typing Indicators: Send lightweight WebSocket messages for typing events, debouncing them on the client.

- Read Receipts: Store read status in the database and push updates via WebSockets.

- Message History: When a chat window is opened, fetch the most recent messages from the database in pages (e.g., cursor-based pagination), loading older ones only as the user scrolls back.

- WebRTC (for voice/video calls): Integrate WebRTC for more advanced communication features.
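Loading history page by page keeps opening a chat window cheap. A minimal sketch of cursor-based pagination follows; it assumes message IDs increase monotonically within a conversation, uses a Python list sorted by id in place of the database table, and the names `Message` and `fetch_history` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Message:
    id: int       # assumed to increase monotonically within a conversation
    sender: str
    text: str


def fetch_history(messages: List[Message], before_id: Optional[int] = None,
                  limit: int = 50) -> Tuple[List[Message], Optional[int]]:
    """Return up to `limit` messages older than `before_id` (oldest first),
    plus the cursor for the next older page, or None when there is none.

    `messages` stands in for a table sorted by id; a SQL version would look
    roughly like: WHERE conversation_id = ? AND id < ? ORDER BY id DESC LIMIT ?,
    with the rows reversed afterwards.
    """
    if limit < 1:
        raise ValueError("limit must be at least 1")
    older = [m for m in messages if before_id is None or m.id < before_id]
    page = older[-limit:]  # the newest `limit` of the older messages
    next_cursor = page[0].id if len(older) > limit else None
    return page, next_cursor


history = [Message(i, "alice" if i % 2 else "bob", f"message {i}") for i in range(1, 121)]
page, cursor = fetch_history(history)                # opening the chat: ids 71..120
older_page, cursor = fetch_history(history, cursor)  # scrolling back: ids 21..70
```

The client passes the returned cursor as `before_id` to load the next older page; unlike offset-based paging, new messages arriving at the end of the conversation do not shift the pages.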

Notifications:

- Notification Types: Categorize notifications (e.g., new message, friend request, system alert).

- Push Notifications (for mobile): Integrate with APNs (Apple Push Notification service) or FCM (Firebase Cloud Messaging) for native mobile push notifications when the app is in the background.

- In-App Notifications: Display notifications directly within the application using WebSockets/SSE.

- User Preferences: Allow users to customize notification preferences (e.g., sound, vibration, email).

- Batching and Digest Notifications: For less critical notifications, consider batching them into a daily/weekly digest.
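Notification types and user preferences combine naturally into a routing step that decides where each notification goes. This sketch assumes a hypothetical preference schema (a set of enabled channels per notification type, with defaults) and sends native push only when the user has no live connection; `Channel`, `Preferences`, `DEFAULTS`, and `route` are illustrative names.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Set


class Channel(Enum):
    IN_APP = "in_app"
    PUSH = "push"
    EMAIL = "email"
    DIGEST = "digest"


@dataclass
class Preferences:
    # Per notification type, the channels the user has enabled (hypothetical schema).
    channels: Dict[str, Set[Channel]] = field(default_factory=dict)


# Assumed defaults for users who have not customized a notification type.
DEFAULTS: Dict[str, Set[Channel]] = {
    "new_message": {Channel.IN_APP, Channel.PUSH},
    "friend_request": {Channel.IN_APP, Channel.PUSH},
    "system_alert": {Channel.IN_APP, Channel.EMAIL},
    "weekly_summary": {Channel.DIGEST},
}


def route(notification_type: str, prefs: Preferences, user_online: bool) -> List[Channel]:
    """Return the delivery channels for one notification, in a fixed order.

    The notification is assumed to be persisted regardless, so an offline user
    still sees in-app notifications in their history when they return.
    """
    enabled = prefs.channels.get(notification_type,
                                 DEFAULTS.get(notification_type, {Channel.IN_APP}))
    routes = []
    if Channel.IN_APP in enabled and user_online:
        routes.append(Channel.IN_APP)  # deliver over the open WebSocket/SSE connection
    if Channel.PUSH in enabled and not user_online:
        routes.append(Channel.PUSH)    # app is backgrounded or closed: use APNs/FCM
    if Channel.EMAIL in enabled:
        routes.append(Channel.EMAIL)
    if Channel.DIGEST in enabled:
        routes.append(Channel.DIGEST)  # queued for the next daily/weekly digest
    return routes
```

Making push conditional on the user being offline avoids notifying someone twice for the same event while they are actively using the app.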

V. Testing and Continuous Improvement

- Load Testing: Simulate high user loads to identify bottlenecks and test scalability.

- Stress Testing: Push your system beyond its normal operating limits to understand its breaking point.

- End-to-End Testing: Ensure the entire real-time flow works correctly from client to server and back.

- A/B Testing: Experiment with different optimization strategies to see what works best for your specific use case.

- Regular Code Reviews and Profiling: Continuously review code for potential performance issues and use profiling tools to identify hot spots.