Explainable AI (XAI) and Trustworthy Decision Systems: Making AI’s Choices Transparent and Reliable

Explainable AI (XAI) is flipping the script on how we build, use, and trust AI systems. As these models get more advanced and start running critical parts of our world, being able to actually understand how they make decisions isn’t just a nice-to-have anymore—it’s essential if we want responsible AI that people can trust.

What Exactly is Explainable AI?

At its core, XAI is all about making AI decisions understandable to humans. Traditional models can be “black boxes”—they work well but don’t show their reasoning. With XAI, we’re peeling back that curtain, showing what factors influenced the outcome, how the system reached a decision, and why it picked one option over another.

There are a few layers to keep straight here:

- Explainability → breaking down AI decisions into plain language so humans get the “why.”

- Interpretability → going deeper into the guts of the model, looking at how inputs become outputs.

- Transparency → the bigger picture: making the system’s data, processes, and design openly understandable.

Together, these give us a framework for AI systems that we can actually trust instead of just hoping they’re right.

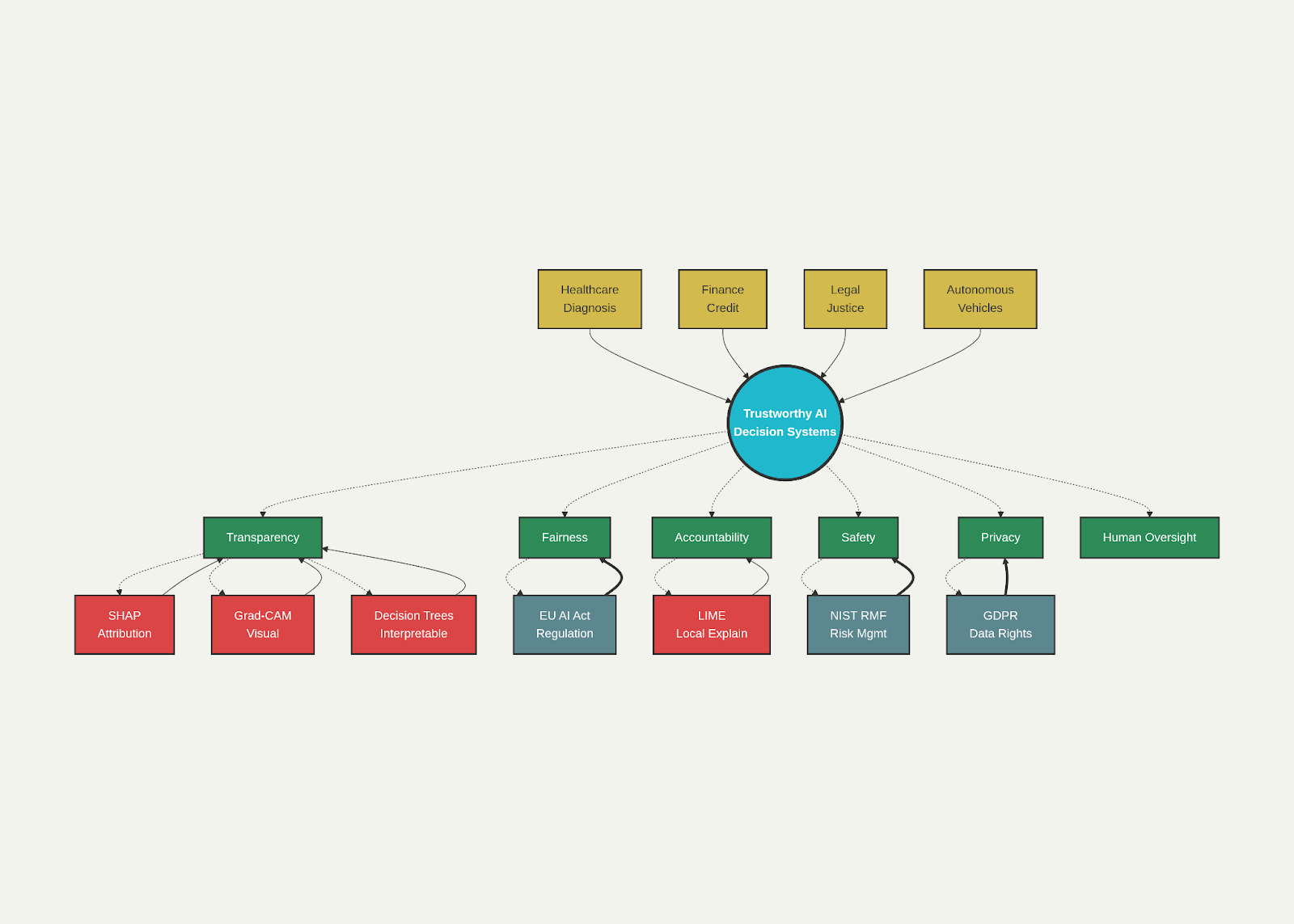

Comprehensive Framework: Trustworthy AI and Explainable AI Ecosystem

Building Trustworthy AI: The XAI Toolkit

Model-Agnostic Methods

These work with any AI system, no matter the architecture. They basically poke at the model, test how it reacts, and pull together explanations.

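- SHAP (SHapley Additive exPlanations): Uses game theory to figure out how much each feature contributes to a decision. Think of each input feature as a “player” in a game where the prize is the model’s prediction. SHAP assigns credit using Shapley values: each feature's average marginal contribution across all possible combinations of the other features. Because of this, the credits add up to the difference between this prediction and a baseline prediction.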

- LIME (Local Interpretable Model-agnostic Explanations): Builds a simpler, human-friendly model around a single prediction by testing how small tweaks to the data change the outcome.

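- Grad-CAM (Gradient-weighted Class Activation Mapping): Built for convolutional image models. It highlights the parts of an image that most influenced the model’s decision, basically showing where the AI was “looking.” Strictly speaking, it isn't model-agnostic like SHAP and LIME, because it needs access to the network's internal feature maps and gradients.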
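SHAP's credit assignment rests on Shapley values. The minimal sketch below computes them exactly for one prediction of a tiny, hypothetical model (`toy_model`). It assumes a feature "leaves the game" by being reset to a fixed baseline value, which is one common convention among several. Practical SHAP implementations rely on sampling approximations or model-specific shortcuts, because the exact computation grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for a single prediction model(x).

    A feature is 'present' when it takes its value from x and 'absent'
    when it is reset to the corresponding baseline value.
    """
    n = len(x)

    def value(coalition):
        # Input where only the coalition's features come from x.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions of the other n-1 features: sizes 0..n-1
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition S.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model: a linear term plus an interaction between features 1 and 2.
def toy_model(z):
    return 3 * z[0] + 2 * z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # [3.0, 6.0, 6.0]
```

The interaction term's 12 points of credit are split evenly between features 1 and 2, and the three values sum to `toy_model(x) - toy_model(baseline) = 15`, the "additive" property in SHAP's name.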

Together, these tools let you zoom in and out—local explanations, global feature importance, or visual cues—depending on what you need.
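The core of Grad-CAM's visual cues fits in a few lines once the network's internals are available. This sketch assumes the activations of the last convolutional layer, and the gradients of the target class score with respect to them, have already been extracted (in practice through the deep learning framework's automatic differentiation). The tiny 2×2 maps are made-up numbers. Each channel is weighted by its average gradient, the weighted maps are summed, and a ReLU keeps only the regions that push the class score up.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients (each an H x W nested list)."""
    k_maps = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight alpha_k: global average of the gradients over map k.
    alphas = [sum(sum(row) for row in grad) / (h * w) for grad in gradients]
    # Weighted sum of the feature maps, then ReLU to keep only positive evidence.
    cam = [
        [max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(k_maps))) for j in range(w)]
        for i in range(h)
    ]
    # Scale to [0, 1] for display (a common convention, not part of the core formula).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Made-up values: channel 0 fires top-left and helps the class; channel 1 fires bottom-right and hurts it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.5, -0.5], [-0.5, -0.5]]]
heatmap = grad_cam(acts, grads)
print(heatmap)  # [[1.0, 0.0], [0.0, 0.0]]
```

Real feature maps are much coarser than the input image, so the resulting heatmap is upsampled and overlaid on the image before anyone looks at it.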

Models That Are Interpretable by Design

Sometimes it’s easier to just build models that are transparent from the start.

- Decision trees: You can trace the logic step by step.

- Linear regression: Easy to interpret coefficients when the feature set is small.

- Rule-based systems: If-then rules humans can read and validate.

- RuleFit: Extracts if-then rules from an ensemble of decision trees, then fits a sparse linear model over those rules and the original features, balancing accuracy with clarity.
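To make "trace the logic step by step" concrete, here is a minimal sketch of an ordered if-then rule list (a decision list, essentially a flattened decision-tree path). The rules, thresholds, and feature names are hypothetical, chosen only for illustration. Every decision comes back with the exact sequence of checks that produced it.

```python
# Hypothetical loan-screening rules, checked in order; the first rule that fires decides.
RULES = [
    ("debt_to_income > 0.45", lambda a: a["debt_to_income"] > 0.45, "decline"),
    ("missed_payments >= 3", lambda a: a["missed_payments"] >= 3, "decline"),
    ("income >= 40000", lambda a: a["income"] >= 40000, "approve"),
]
DEFAULT = "refer to human review"  # used when no rule fires


def decide(applicant):
    """Return (decision, trace), where trace records every rule that was checked."""
    trace = []
    for text, condition, outcome in RULES:
        fired = condition(applicant)
        trace.append(f"{text}: {'yes' if fired else 'no'}")
        if fired:
            return outcome, trace
    return DEFAULT, trace


decision, trace = decide({"debt_to_income": 0.30, "missed_payments": 1, "income": 52000})
print(decision)  # approve
for step in trace:
    print("  " + step)
```

A reviewer can validate each rule on its own, which is exactly what's hard to do with the weights of a deep network.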

Hybrid Approaches

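The real power often comes from mixing techniques. In one reported medical-imaging study, a hybrid pipeline combining SHAP, LIME, and Grad-CAM was associated with accuracy rising from 97.2% to 99.4%, while making explanations clearer for different audiences. Post-hoc explanation methods don't change a model's predictions by themselves, so gains like this come from how the pipeline uses what the explanations reveal (for example, to refine features or training), not from the explanations alone.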

Regulations and Compliance: The Legal Side of XAI

GDPR and Transparency Rules

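The EU’s GDPR gives people rights around automated decision-making. Article 22, for example, gives individuals the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects on them, with limited exceptions that still require safeguards such as the right to obtain human intervention.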

That means AI systems need to:

- Be lawful, fair, and transparent in how they use data.

- Limit use of data to the original purpose (no reusing it for random AI experiments).

- Keep datasets lean (tricky, since AI usually likes big data).

- Provide meaningful information about the logic involved in automated decisions (Articles 13–15).

The EU AI Act

The EU AI Act, which entered into force in August 2024 and phases in its obligations from 2025 onward, sets the bar as the first major AI-specific regulation. It sorts systems into four risk tiers: unacceptable risk (prohibited), high risk, limited risk, and minimal risk.

High-risk systems (for example in healthcare, credit scoring, transport, and law enforcement) face the strictest requirements: record-keeping and audit trails, data-quality and bias checks, human oversight, and technical documentation.
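What an audit trail must contain is ultimately set by the regulation and an organization's own governance, not by code. Still, a minimal sketch helps picture the idea: one structured, append-only log record per decision. The `DecisionRecord` schema, its field names, and the model version string below are hypothetical, not something the AI Act prescribes.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class DecisionRecord:
    # Hypothetical schema for one logged decision; field names are illustrative.
    model_version: str
    input_digest: str                     # fingerprint of the exact inputs used
    output: str
    top_factors: List[Tuple[str, float]]  # e.g. attributions from an explainer
    human_reviewer: Optional[str] = None  # who signed off, if anyone
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def digest(inputs: dict) -> str:
    # Canonical JSON (sorted keys) so identical inputs always give the same SHA-256 digest,
    # letting a separately stored copy of the inputs be checked against the log later.
    return hashlib.sha256(json.dumps(inputs, sort_keys=True).encode("utf-8")).hexdigest()


def to_log_line(record: DecisionRecord) -> str:
    # One JSON object per line, suitable for an append-only log file.
    return json.dumps(asdict(record), sort_keys=True)


inputs = {"income": 52000, "debt_to_income": 0.30}
record = DecisionRecord("credit-model-1.4", digest(inputs), "approve", [("income", 0.8)])
line = to_log_line(record)
print(line)
```

Keeping the explanation (`top_factors`) and any human sign-off in the same record as the output means an auditor can reconstruct not just what was decided, but why and by whom.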

And because it applies alongside the GDPR rather than replacing it, companies working in Europe face a double set of responsibilities.

NIST AI Risk Management Framework

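In the U.S., the NIST AI RMF is a voluntary framework rather than a law: less about strict rules and more about best practices. It is organized around four core functions:

- Govern – set up policies, accountability, and oversight structures.

- Map – establish the context and identify risks.

- Measure – assess, analyze, and track those risks, including how the system performs.

- Manage – prioritize risks and act on them.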

Organizations use this framework to keep AI safe and reliable without losing flexibility.

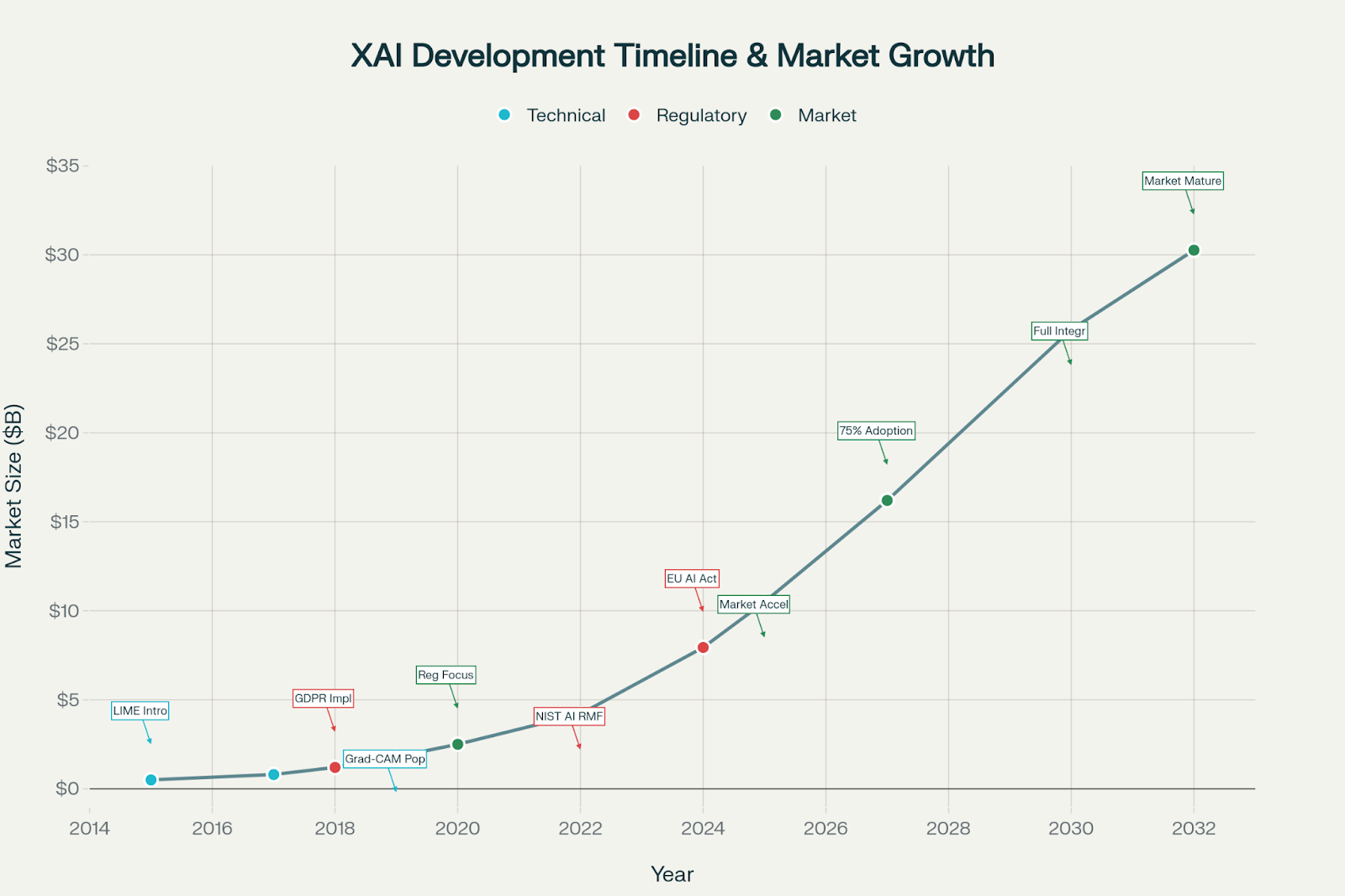

Evolution of Explainable AI: Key Milestones and Market Growth (2015-2032)

Where XAI is Already Making an Impact

Healthcare

Transparency in medical AI is crucial—lives are on the line. XAI helps doctors understand why an algorithm flagged an image or recommended a treatment, making it easier to trust the system.

Applications include:

- Imaging: Explaining what abnormalities were spotted.

- Diagnosis: Offering reasons behind AI suggestions.

- Clinical documentation: Making sure automated transcription/coding is transparent and auditable.

- Drug discovery: Helping researchers understand molecular predictions.

- Risk stratification: Explaining why certain patients are high-risk so doctors can discuss it clearly.

Finance

Here, the rules are strict, so explainability is non-negotiable.

- Credit scoring: Customers can see why their loan was denied and how to improve.

- Fraud detection: Investigators can see why a transaction was flagged, so false alarms get cleared faster.

- Trading: Decisions need to be traceable for compliance.

- Insurance: Premiums and claims decisions must be explainable to maintain trust.
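For a linear scoring model, "why was my loan denied?" has a clean answer. The sketch below assumes a hypothetical linear credit score with made-up coefficients and measures each feature's contribution against a reference applicant. The most negative contributions become the reasons reported to the customer. For a linear model, these contributions are also the Shapley values computed against that same reference.

```python
# Hypothetical linear credit model: score = intercept + sum(COEFS[f] * value_f).
COEFS = {"income_k": 0.04, "debt_to_income": -6.0, "missed_payments": -0.9, "years_employed": 0.15}
# Hypothetical reference point, e.g. a typical approved applicant.
REFERENCE = {"income_k": 55, "debt_to_income": 0.30, "missed_payments": 0, "years_employed": 5}


def reason_codes(applicant, top_k=2):
    """Return the top_k features that pulled the score down most, plus all contributions."""
    # Contribution of each feature relative to the reference; in a linear model these
    # sum to score(applicant) - score(reference), so the intercept cancels out.
    contribs = {f: COEFS[f] * (applicant[f] - REFERENCE[f]) for f in COEFS}
    negatives = sorted((c, f) for f, c in contribs.items() if c < 0)  # most negative first
    return [f for _, f in negatives[:top_k]], contribs


applicant = {"income_k": 38, "debt_to_income": 0.52, "missed_payments": 2, "years_employed": 6}
reasons, contribs = reason_codes(applicant)
print(reasons)  # ['missed_payments', 'debt_to_income']
```

The same contributions also tell the customer how to improve: here, reducing missed payments would move the score the most.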

Transportation and Autonomous Systems

Self-driving cars and traffic AI need real-time explanations for safety.

- Autonomous vehicles: Justifying navigation choices in critical moments.

- Traffic systems: Showing why certain optimizations were made.

- Predictive maintenance: Explaining why a part needs to be replaced before it fails.

Challenges and Roadblocks

Balancing Accuracy with Clarity

More expressive models, such as deep neural networks, are often more accurate but harder to interpret, while simpler models don’t always capture the complexity needed. It’s a constant trade-off.

Performance Costs

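Generating explanations costs extra computation. Slowdowns of 20–40% are sometimes cited, though the real overhead depends heavily on the method and the model. Exact Shapley values, for instance, require evaluating the model on every subset of features, a number that doubles with each added feature. For real-time applications, that’s a problem.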

Scaling Issues

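Methods like LIME are too heavy for massive systems making millions of decisions daily, because every single explanation requires querying the model on many perturbed samples and fitting a fresh surrogate model. More efficient techniques are needed.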
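A stripped-down version of the LIME idea shows where that cost comes from. This sketch is not the `lime` package, which adds refinements such as discretization, feature selection, and a regularized surrogate. It perturbs one input, queries the model once per perturbed sample, weights samples by proximity, and fits a weighted linear surrogate whose slopes are the explanation. The `black_box` function and all parameter values are hypothetical.

```python
import math
import random


def black_box(z):
    # Hypothetical stand-in for an expensive model: any callable taking a feature list works.
    return math.sin(z[0]) + z[1] ** 2


def solve(a, b):
    """Solve the small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model, x, n_samples=1000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return (local slopes, model calls)."""
    rng = random.Random(seed)
    d = len(x)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]  # weighted normal equations, intercept first
    xty = [0.0] * (d + 1)
    calls = 0
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturb the input
        y = model(z)  # one model query per sample: the cost that explodes at scale
        calls += 1
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # nearby samples count more
        row = [1.0] + z
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coef = solve(xtx, xty)
    return coef[1:], calls


slopes, calls = lime_explain(black_box, [0.0, 1.0])
print(slopes, calls)
```

The slopes should land close to the true local gradient at this point, (1, 2). At 1,000 model calls per explanation, explaining a million decisions a day would mean roughly a billion extra model evaluations daily.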

Data Bias

If your data is biased, your model will learn that bias, and its explanations will faithfully reflect it. That means organizations must constantly check for fairness and ensure explanations don’t legitimize or reinforce harmful patterns.
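Checking for fairness starts with measuring outcomes across groups. The minimal sketch below computes two common group metrics on made-up decisions. The first is the demographic parity difference (the gap in approval rates). The second is the impact ratio (lowest rate divided by highest), which the U.S. "four-fifths rule" heuristic for employment selection flags when it falls below 0.8. These are only two of many fairness metrics, and different metrics can conflict with one another.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def parity_report(decisions, groups):
    rates = selection_rates(decisions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "parity_difference": highest - lowest,                 # 0.0 means identical approval rates
        "impact_ratio": lowest / highest if highest else 1.0,  # four-fifths heuristic flags < 0.8
    }


# Made-up decisions for two groups of four applicants each.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = parity_report(decisions, groups)
print(report["rates"], round(report["parity_difference"], 2), round(report["impact_ratio"], 2))
# {'A': 0.75, 'B': 0.25} 0.5 0.33
```

A low ratio doesn't prove discrimination, and an acceptable one doesn't rule it out. It's a signal to dig into the model and its explanations.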

Regulatory Complexity

For global companies, it’s a juggling act: the GDPR, the EU AI Act, the NIST AI RMF, and local laws all overlap. Add in strict audit trail requirements, and compliance gets complicated fast.

How Organizations Are Tackling These Issues

- Hybrid models: Use interpretable models where decisions must be justified to the people they affect, and more complex ones where raw predictive accuracy matters more than a step-by-step rationale.

- Automated explanation tools: Platforms are making XAI plug-and-play, so teams don’t need PhD-level knowledge to apply it.

- Stakeholder-specific explanations: Tailoring explanations for different audiences—technical teams, business leaders, or customers—so everyone gets the right level of detail.
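One way to picture stakeholder-specific explanations: compute attributions once, then render them at different levels of detail. The `explain_for` function, the audience labels, and the attribution values below are hypothetical.

```python
def explain_for(audience, contributions, top_k=2):
    """Render one set of feature attributions at the level of detail an audience needs."""
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "technical":
        # Full signed attributions for data scientists and auditors.
        return "; ".join(f"{name}={value:+.3f}" for name, value in ranked)
    if audience == "business":
        # Just the biggest drivers, without numbers.
        return "Main drivers: " + ", ".join(name for name, _ in ranked[:top_k])
    if audience == "customer":
        # Plain-language list of the factors that worked against this person.
        hurt = [name.replace("_", " ") for name, value in ranked if value < 0][:top_k]
        return "Factors that lowered your result: " + (", ".join(hurt) if hurt else "none")
    raise ValueError(f"unknown audience: {audience}")


# Hypothetical attributions for one credit decision (e.g. from SHAP or a linear model).
attributions = {"missed_payments": -1.8, "debt_to_income": -1.32, "income": -0.68, "years_employed": 0.15}
for audience in ("technical", "business", "customer"):
    print(f"{audience}: {explain_for(audience, attributions)}")
```

Computing the explanation once and formatting it per audience keeps every stakeholder's version consistent with the same underlying numbers.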

The Road Ahead

Next-Gen XAI Tech

- Neuro-symbolic AI: Blends neural networks with symbolic reasoning, aiming for deep learning’s accuracy while keeping human-readable logic.

- Causal discovery: Aims to find cause-effect relationships instead of just correlations, so explanations can describe what actually drives an outcome rather than what merely moves with it.

- Foundation model interpretability: Research into building “interpreter heads” and similar components into large language models, so they can explain how they reached an answer.

Making XAI Accessible

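- Cloud XAI platforms: Major cloud providers already offer built-in explainability services, such as Amazon SageMaker Clarify, Google Cloud’s Vertex Explainable AI, and the Responsible AI dashboard in Azure Machine Learning.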

- No-code solutions: Business users can generate explanations without coding, opening the door to non-technical teams.

Market Growth

The XAI market is booming: market forecasts project growth from $7.94B in 2024 to $30.26B by 2032 (an 18.2% CAGR). Some forecasts also expect 75% of organizations to use XAI by 2027, and predict that companies embracing it will see 30% higher ROI than those relying on black-box AI.
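As a quick arithmetic check on the forecast figures (not on the forecast itself), the stated growth rate matches compounding over the eight years from 2024 to 2032:

$$
\text{CAGR} = \left(\frac{V_{2032}}{V_{2024}}\right)^{1/8} - 1 = \left(\frac{30.26}{7.94}\right)^{1/8} - 1 \approx 3.811^{0.125} - 1 \approx 0.182
$$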

Conclusion

Explainable AI (XAI) is no longer a luxury—it is the foundation of trustworthy, responsible, and human-centered AI. As artificial intelligence becomes deeply embedded in critical sectors like healthcare, finance, governance, and transportation, the ability to understand and validate AI decisions will determine not just compliance with regulations, but also long-term user trust and adoption.

The path forward lies in balancing accuracy with interpretability, adopting hybrid models, and tailoring explanations for diverse stakeholders. With evolving global and national regulatory frameworks, organizations that invest in XAI early will gain a strategic advantage, ensuring both accountability and innovation.

Ultimately, XAI is shaping the future of AI itself—transforming it from opaque “black boxes” into transparent, reliable, and inclusive systems that people can trust. In the years ahead, explainability will not just be an add-on, but the default expectation for every AI system deployed in society.